Aim

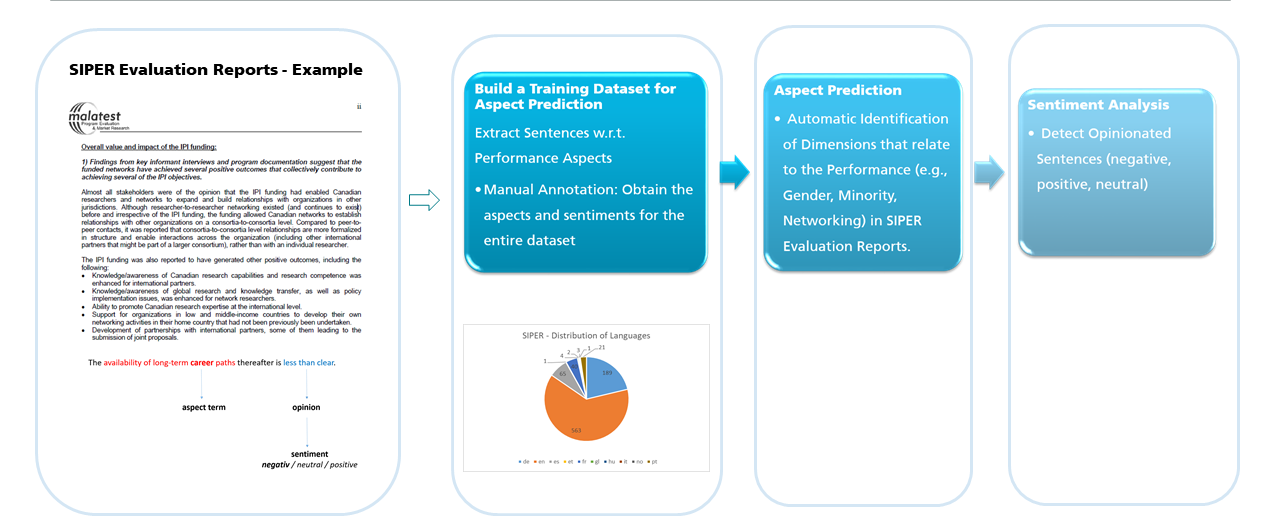

SIPER (Science and Innovation Policy Evaluation Repository) is a database of science and innovation policy evaluations that currently contains approximately 900 evaluation studies. It categorizes these evaluation reports according to their major evaluation dimensions and funding features. So far, researchers have done this categorization manually.

The ISDEC-SIPER project aims to test the possibilities and limitations of automated procedures for analyzing the content of evaluation reports. The main challenge lies in the specific nature of these documents: evaluation reports differ strongly in their structure, language and content (especially in the evaluation dimensions they address), and there is no common reporting structure.